NVIDIA CEO Jensen Huang opened the GTC 2022 keynote with the weather. NVIDIA researchers are working with universities around the U.S. on a new system that can track atmospheric rivers and predict catastrophic rain storms up to a week in advance.

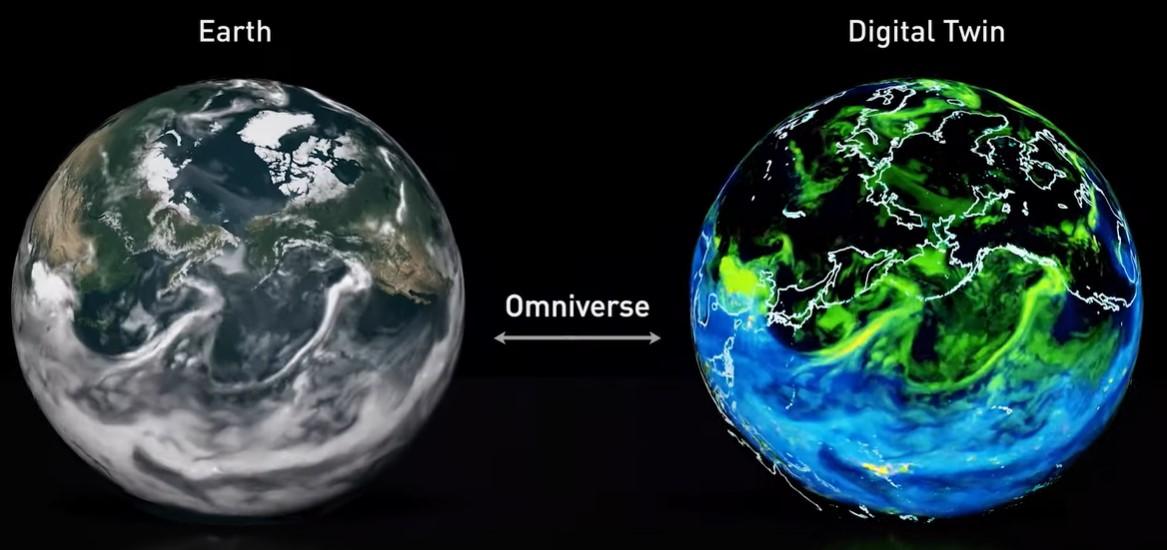

Researchers are building this forecasting model with Earth-2, a digital twin supercomputer, and FourCastNet, a physics-informed deep learning model that was trained on 40 years of weather data.

“For the first time a deep learning model has achieved better accuracy and skill on precipitation forecasting than state-of-the-art numerical models,” he said. “It makes predictions four to five orders of magnitude faster.”

NVIDIA’s world domination plans aren’t limited to weather forecasting. NVIDIA has chips, software, hardware and platforms to power the next evolution of the internet that go well beyond what Meta has in the works. At the kickoff to the week-long virtual conference for AI developers, Huang announced advances in almost every industry and every aspect of artificial intelligence. The company is moving forward quickly in each pillar of its AI strategy.

Huang described these virtual worlds as an omniverse, made possible by 20 years of advancements in graphics, physics, simulation, AI and computing tech.

Chirag Dekate, Ph.D., VP and analyst of AI infrastructure, digital R&D and emerging technologies at Gartner, said NVIDIA’s GTC 2022 announcements reinforce its AI leadership while setting up a foundation for sustained growth powered by a comprehensive strategy.

“The comprehensive strategy indeed positions NVIDIA in the lead of developing virtual worlds with potential for transformative enterprise impact,” Dekate said. “NVIDIA has raised the bar of what it takes to deliver leadership AI.”

Huang’s announcements ranged from robotics and warehouses to autonomous vehicles and mapping software to digital twins and Omniverse collaboration software. The company is also developing networking products and new chips to power all these products.

“The compounding effect of this strategy results in a capability and value capture gap between NVIDIA and its peers,” Dekate said. “This gap has never been wider.”

Dekate singled out the H100, HGX H100, DGX H100, NVLink, SuperPOD and Quantum-2 InfiniBand as highlights among the dizzying array of advances NVIDIA announced.

“The Grace CPU superchip offers a highly customizable ARM-based platform that is likely to be core [to] emerging infrastructure strategies across high performance computing, AI and edge computing,” he said.

Dekate also noted that companies can access NVIDIA’s innovations through their preferred innovation channels, which gives the company an edge over competitors.

Huang announced a lot of product news during the keynote, but he also explained how all the components work together to enable digital twins. NVIDIA OVX operates digital twin simulations within NVIDIA Omniverse, a real-time world simulation and 3D design collaboration platform.

Designers, engineers and planners can use OVX to build physically accurate digital twins of buildings or create massive, true-to-reality simulated environments with precise time synchronization across physical and virtual worlds, according to the company. This platform includes Beyond Avatar, Holoscan, Drive, Isaac and Metropolis.

Dekate sees OVX as a transformative platform that companies can use to capture value from industrial digital twins.

“Omniverse does to industrial digital twins what CUDA did to AI,” he said. “By expanding supported omniverse connectors nearly 10x, and curating a compelling robotics platform, NVIDIA has devised a persuasive ecosystem strategy that will catalyze its next growth phase.”

During the keynote, Huang also talked to Toy Jensen, his own digital twin. TJ explained how audio and visual inputs are synthesized on the fly to generate these avatars. The demo showed how multiple NVIDIA components work together to make these digital humans possible, including Megatron, a transformer language model with 530 billion parameters. NVIDIA and Microsoft have worked on this project together to improve AI for natural language generation.

The OVX server consists of eight NVIDIA A40 GPUs, three NVIDIA ConnectX-6 Dx 200 Gbps NICs, 1TB system memory and 16TB NVMe storage. The system can scale from a single pod of eight OVX servers to a SuperPOD of 32 OVX servers connected with NVIDIA Spectrum-3 switch fabric or multiple OVX SuperPODs.
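To make the scaling figures concrete, here is a minimal sketch that multiplies the per-server specs above across a pod of eight servers and a SuperPOD of 32. The class and function names are hypothetical, and the network figure is only the sum of NIC line rates, not a measured throughput.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OvxServer:
    """Per-server figures quoted in the article (names are illustrative)."""
    gpus: int = 8          # NVIDIA A40 GPUs
    nics: int = 3          # ConnectX-6 Dx NICs
    nic_gbps: int = 200    # line rate per NIC
    memory_tb: int = 1     # system memory
    nvme_tb: int = 16      # NVMe storage


def totals(server: OvxServer, count: int) -> dict:
    """Multiply per-server resources by the number of servers."""
    return {
        "servers": count,
        "gpus": server.gpus * count,
        # Sum of NIC line rates only; real throughput depends on the fabric.
        "nic_gbps": server.nics * server.nic_gbps * count,
        "memory_tb": server.memory_tb * count,
        "nvme_tb": server.nvme_tb * count,
    }


pod = totals(OvxServer(), 8)         # single pod of eight servers
superpod = totals(OvxServer(), 32)   # SuperPOD of 32 servers

for name, spec in (("pod", pod), ("SuperPOD", superpod)):
    print(name, spec)
```

Under these figures, a pod holds 64 GPUs and a SuperPOD holds 256.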

In addition to forecasting the world’s weather, NVIDIA is using its autonomous vehicle and mapping software to create a digital twin of the world’s roads.

“By the end of 2024, we expect to map and create a digital twin of all major highways in North America, Western Europe and Asia, about 500,000 kilometers,” he said.

The map will be expanded and updated by millions of passenger cars, Huang said. The company is also building an earth-scale digital twin to train its AV fleet and test new algorithms.

This map will come from data collected via NVIDIA’s AV platforms, including DRIVE Map, Hyperion and Orin. Huang introduced DRIVE Map during the keynote. This mapping platform collects data from multiple sources to improve redundancy and safety in autonomous driving. DRIVE Map collects data from three localization layers: camera, lidar and radar. The algorithm driving an autonomous car uses information from each individual layer to analyze a particular driving situation or confirm data from another source. The cameras collect map information such as lane dividers, road markings, traffic lights and signs. The radar is useful in low light and bad weather. The lidars create a 3D representation of the real world at a 5-centimeter resolution.
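The idea of using one layer to confirm another can be illustrated with a small sketch. This is not NVIDIA's algorithm: the function names, the 2D position format and the 0.5-meter tolerance are all assumptions made for illustration. Each layer reports where it thinks the vehicle is, and a layer counts as confirmed when at least one other layer agrees within the tolerance.

```python
import math
from itertools import combinations


def agreeing_layers(estimates, tolerance_m=0.5):
    """Return pairs of layers whose (x, y) estimates lie within tolerance_m.

    `estimates` maps a layer name to a hypothetical (x, y) position in meters.
    """
    pairs = []
    for (name_a, pos_a), (name_b, pos_b) in combinations(sorted(estimates.items()), 2):
        distance = math.hypot(pos_a[0] - pos_b[0], pos_a[1] - pos_b[1])
        if distance <= tolerance_m:
            pairs.append((name_a, name_b))
    return pairs


def is_confirmed(layer, estimates, tolerance_m=0.5):
    """A layer is confirmed if any other layer agrees with it."""
    return any(layer in pair for pair in agreeing_layers(estimates, tolerance_m))


# Made-up readings: camera and lidar agree, radar is an outlier.
estimates = {
    "camera": (12.0, 3.1),
    "lidar": (12.1, 3.0),
    "radar": (14.5, 3.2),
}

print(agreeing_layers(estimates))
print(is_confirmed("radar", estimates))
```

In this made-up case the camera and lidar estimates back each other up, while the radar reading would need a second look.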

All this sensor data goes to Hyperion, NVIDIA’s open platform for automated and autonomous vehicles. The platform includes the computer architecture, sensor set and full NVIDIA DRIVE Chauffeur and Concierge applications. It is modular, so customers can select the features they need.

Hyperion 8 will ship in Mercedes-Benz cars in 2024 and in Jaguars and Land Rovers in 2025. Hyperion 9 will be ready for cars shipping in 2026 and includes 14 cameras, nine radars, three lidars and 20 ultrasonics. Ultrasonic sensors use high-frequency sound waves to measure the distance between objects within close range of a car. The platform will process twice the amount of sensor data compared to the previous version and uses two engines to collect and analyze data: ground truth survey mapping and crowd-sourced fleet mapping.
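The ultrasonic ranging mentioned above typically rests on a time-of-flight calculation: a pulse travels to the object and back, so the distance is half the round trip. The sketch below assumes a speed of sound of about 343 meters per second (dry air at roughly 20°C) and uses a hypothetical function name; it says nothing about how Hyperion's sensors are implemented.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: dry air at about 20 degrees Celsius


def echo_distance_m(round_trip_s: float,
                    speed_m_per_s: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Distance to an object from the round-trip time of an ultrasonic pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse covers the distance twice (out and back), so halve it.
    return speed_m_per_s * round_trip_s / 2.0


# Example: an echo that returns after 10 milliseconds.
print(f"{echo_distance_m(0.010):.3f} m")
```

With these assumptions, a 10-millisecond echo corresponds to an object a little over 1.7 meters away.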

NVIDIA hasn’t forgotten its hardware roots amid all this talk of digital twins and virtual worlds. Huang announced several new hardware products to round out the fourth pillar of its AI strategy.

“Data centers are becoming AI factories — processing and refining mountains of data to produce intelligence,” Huang said.

Developers are an essential part of any tech ecosystem and NVIDIA has that component of its world domination plan covered as well. Huang announced Omniverse for Developers to support collaborative game development. New features for game developers include updates to Audio2Face, Nucleus Cloud, Deep Search and the Unreal Engine 5 Omniverse Connector.

The company also announced 60 updates to its CUDA-X libraries, tools and technologies.
